AI Governance Platforms: Ensuring Ethical and Responsible AI

By Ankita Das

Artificial intelligence (AI) has profoundly impacted industries and society, transforming the way we live and work. Its automation, data analysis, and decision-making abilities drive innovation and efficiency. However, this rapid integration raises significant ethical concerns, such as bias, privacy violations, and accountability issues. To address these challenges, AI governance platforms have become crucial. These platforms provide a structured approach to ensure that AI systems are developed and deployed responsibly, aligning with ethical principles and societal values.

By implementing robust governance, organizations can mitigate risks like discrimination and misuse, fostering trust in AI technologies. In this post, we look at what AI governance platforms are, their key components, and best practices for implementing them. Understanding these elements helps organizations capture the benefits of AI while keeping it within ethical, legal, and security requirements.

What are AI Governance Platforms and Why Do We Need Them?

AI governance platforms are comprehensive systems of rules, processes, and tools designed to ensure that artificial intelligence (AI) technologies are developed and deployed responsibly. These platforms align AI systems with ethical principles, legal requirements, and societal values while addressing the risks associated with their use. As AI spreads across healthcare, finance, and public services, governance needs have grown.

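AI systems often inherit human biases from their training data or algorithms, leading to discriminatory outcomes. For example, a 2016 ProPublica analysis of COMPAS, a risk-assessment tool used in some U.S. courts to inform bail and sentencing decisions, found that Black defendants who did not go on to reoffend were far more likely than white defendants to be labeled high risk. This case highlights the dangers of unregulated AI decision-making. Similarly, Microsoft’s Tay chatbot, released on Twitter in 2016, was taken offline within a day after users steered it into posting offensive content, demonstrating how quickly AI can adopt harmful behaviors when exposed to unmonitored data inputs.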

Governance frameworks address these challenges by implementing policies, standards, and monitoring mechanisms that ensure the ethical use of AI. By balancing technological innovation with ethical safeguards, AI governance platforms help organizations avoid reputational damage and legal risks while building trust in AI systems.

Key Components of AI Governance Platforms

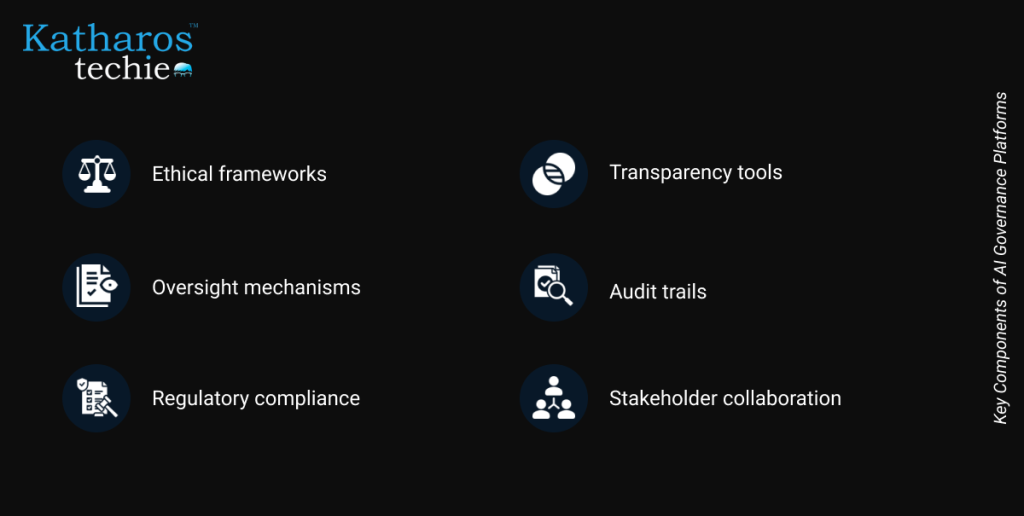

AI governance platforms are essential for ensuring that artificial intelligence systems operate in compliance with societal and regulatory expectations. These platforms integrate several critical components to provide oversight and accountability throughout the AI lifecycle.

- Ethical Frameworks

Ethical frameworks establish guiding principles such as empathy, transparency, fairness, accountability, and safety. These principles help ensure that AI systems respect human rights and societal values. For example, fairness requires measuring and reducing bias in AI models, while transparency requires that decision-making processes can be explained to the people they affect. These frameworks act as the foundation for responsible AI development and deployment.

- Oversight Mechanisms

Oversight mechanisms involve tools that monitor AI systems for bias, drift, and anomalies in real time. Drift detection flags when the data a model sees in production no longer resembles the data it was trained on, so that accuracy problems can be caught as distributions change over time. Bias detection identifies discriminatory patterns in data or predictions. Together, these mechanisms provide proactive monitoring to maintain the integrity and reliability of AI systems.
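As one illustration of drift detection, the sketch below computes the Population Stability Index (PSI) for a single numeric feature by comparing a reference sample (for example, training data) with a current sample (for example, recent production data). PSI is only one of several possible drift measures, and the function name, bin count, and smoothing constant here are assumptions chosen for the example rather than part of any particular governance product.

```python
import math
from typing import Sequence


def population_stability_index(
    reference: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """PSI between a reference and a current sample of one numeric feature."""
    lo, hi = min(reference), max(reference)
    # Equal-width bins over the reference range; fall back to 1.0 if the
    # reference feature is constant (assumption made for this sketch).
    width = (hi - lo) / bins or 1.0

    def bin_fractions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Clamp so values outside the reference range land in an edge bin.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor each fraction at eps so the logarithm below stays defined.
        return [max(c / len(values), eps) for c in counts]

    ref_frac = bin_fractions(reference)
    cur_frac = bin_fractions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_frac, cur_frac))
```

A PSI of zero means the two binned distributions match. A commonly cited rule of thumb treats values above roughly 0.25 as a significant shift, but that threshold is a convention to tune per feature, not a guarantee.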

- Regulatory Compliance

Regulatory compliance frameworks support adherence to laws such as the EU's General Data Protection Regulation (GDPR) and to industry-specific standards in sectors like healthcare and finance. They help organizations manage privacy and discrimination risks, assign clear accountability, and demonstrate that AI systems meet legal requirements. This component is critical for building trust among users and stakeholders.

- Transparency Tools

Transparency tools include dashboards that provide real-time updates on model health, performance metrics, and decision-making processes. These tools enable stakeholders to understand how AI systems function and ensure that decisions are based on clear and justifiable criteria. Transparency fosters trust and facilitates better oversight of AI technologies.

- Audit Trails

Audit trails involve maintaining detailed logs of decisions made by AI systems. These logs help organizations track the reasoning behind AI-driven actions, simplifying error or bias detection during reviews. Audit trails are essential for accountability, particularly in regulated industries where compliance is mandatory.
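A minimal sketch of such a log is shown below. It keeps entries in memory and chains each entry to the previous one with a SHA-256 hash, so that later edits to a stored record can be detected. The hash chain, the class name `AuditTrail`, and the field names are assumptions made for illustration; a production system would also need durable storage and access controls.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditTrail:
    """In-memory, append-only log of model decisions with a hash chain."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, model_version: str, inputs: dict, output, reason: str) -> dict:
        # inputs and output must be JSON-serializable for hashing to work.
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "model_version": model_version,
            "inputs": inputs,
            "output": output,
            "reason": reason,
            "prev_hash": self.entries[-1]["hash"] if self.entries else "",
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    @staticmethod
    def _digest(entry: dict) -> str:
        # Hash every field except the hash itself, with keys in a stable order.
        body = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def verify(self) -> bool:
        """Return True if no entry was altered, removed, or reordered."""
        prev = ""
        for entry in self.entries:
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True
```

During a review, `verify()` gives a quick check that the log is intact before anyone relies on the recorded inputs, outputs, and reasons.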

- Stakeholder Collaboration

Stakeholder collaboration ensures the involvement of diverse groups such as developers, policymakers, ethicists, and end-users in the governance process. Incorporating diverse perspectives helps organizations align AI systems with societal values and effectively address potential risks. This collaborative approach fosters a shared understanding of ethical responsibilities in AI deployment.

Best Practices for Implementing AI Governance Platforms

Implementing an effective AI governance platform requires a strategic approach combining ethical considerations with operational efficiency. Below are best practices to guide organizations:

1. Adopt Multidisciplinary Approaches

Collaboration across technology, law, ethics, and business domains is essential to address the diverse challenges of AI governance. For instance, technologists can focus on technical feasibility while ethicists ensure alignment with societal values, creating a balanced governance framework.

2. Automated Monitoring Systems

Automated monitoring systems detect bias or performance deviations early by analyzing data streams in real time. These tools help organizations maintain the integrity of their models by identifying issues before they escalate into significant problems.
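One simple form of automated monitoring is a rolling check on accuracy once ground-truth labels arrive. The sketch below is illustrative only: the class name `RollingAccuracyMonitor`, the window size, and the tolerance are assumptions, and real deployments typically track several metrics and route alerts to an on-call process.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Alert when accuracy over the last `window` labelled predictions
    falls more than `tolerance` below a baseline."""

    def __init__(self, baseline_accuracy: float, window: int = 100,
                 tolerance: float = 0.05) -> None:
        self.baseline_accuracy = baseline_accuracy
        self.tolerance = tolerance
        self.outcomes: deque = deque(maxlen=window)

    def observe(self, prediction, actual) -> bool:
        """Record one labelled prediction; return True if an alert should fire."""
        self.outcomes.append(prediction == actual)
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # wait until the window is full
        accuracy = sum(self.outcomes) / len(self.outcomes)
        return accuracy < self.baseline_accuracy - self.tolerance
```

Waiting for a full window avoids noisy alerts from the first few observations, at the cost of a short delay before monitoring becomes active.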

3. Transparent Reporting

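Transparent reporting involves explaining decision-making processes to stakeholders through interpretability techniques such as SHAP (SHapley Additive exPlanations) or LIME (Local Interpretable Model-agnostic Explanations), which attribute an individual prediction to the input features that drove it. This practice builds trust by providing insight into how models arrive at their conclusions and supports accountability across the model's lifecycle.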
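To make the idea behind SHAP concrete, the sketch below computes exact Shapley values for a toy model by brute force. It is not the `shap` library's API: the function name is hypothetical, "missing" features are simulated by substituting a baseline value (a simplifying assumption), and the cost grows exponentially with the number of features, which is why practical tools rely on approximations.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Sequence


def shapley_values(
    predict: Callable[[Sequence[float]], float],
    instance: Sequence[float],
    baseline: Sequence[float],
) -> list[float]:
    """Exact Shapley attribution of predict(instance) - predict(baseline)."""
    n = len(instance)

    def value(subset: frozenset) -> float:
        # Features in the subset take the instance's value; the rest stay at baseline.
        mixed = [instance[i] if i in subset else baseline[i] for i in range(n)]
        return predict(mixed)

    attributions = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size that exclude feature i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for combo in combinations(others, size):
                s = frozenset(combo)
                total += weight * (value(s | {i}) - value(s))
        attributions.append(total)
    return attributions
```

The attributions sum to the difference between the prediction for the instance and the prediction for the baseline, which is what lets a report say how much each feature pushed a decision up or down.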

4. Custom Metrics Alignment

Organizations should define custom metrics that align with their goals while safeguarding ethical outcomes. For example, tracking a fairness score alongside traditional KPIs like accuracy, and requiring both to meet agreed thresholds, helps ensure that business objectives do not override ethical considerations in decision-making.
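The sketch below shows one way to pair a fairness score with accuracy in a single release check. It uses demographic parity difference (the largest gap in positive-prediction rate between groups) as the fairness score, which is only one of many fairness definitions. The function names and both thresholds are assumptions for illustration and would need to be set by each organization.

```python
from typing import Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Share of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def demographic_parity_difference(y_pred: Sequence[int],
                                  groups: Sequence[str]) -> float:
    """Largest gap in positive-prediction rate between any two groups."""
    totals: dict[str, int] = {}
    positives: dict[str, int] = {}
    for pred, group in zip(y_pred, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + (pred == 1)
    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates)


def passes_release_gate(
    y_true: Sequence[int],
    y_pred: Sequence[int],
    groups: Sequence[str],
    min_accuracy: float = 0.8,     # hypothetical threshold
    max_parity_gap: float = 0.1,   # hypothetical threshold
) -> bool:
    """A model passes only if it meets both the accuracy and fairness bars."""
    return (accuracy(y_true, y_pred) >= min_accuracy
            and demographic_parity_difference(y_pred, groups) <= max_parity_gap)
```

Treating the two metrics as a joint gate, rather than folding them into one weighted score, keeps a strong accuracy figure from masking a large gap between groups.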

5. Continuous Updates

Regular evaluation and updating of AI models prevent issues like data drift or outdated behavior from affecting performance. Continuous improvement ensures that models remain relevant in changing environments while adhering to evolving regulations or standards.

6. Training & Awareness

Educating stakeholders on ethical principles and governance frameworks is crucial for fostering a culture of responsibility within organizations. Training programs should cover topics like bias detection, regulatory requirements, and best practices for ethical AI use to ensure all team members understand their roles in maintaining governance standards.

By integrating these components and adhering to these best practices, organizations can build robust AI governance platforms that ensure ethical operations while driving innovation and trustworthiness in their AI initiatives.

AI governance platforms ensure that artificial intelligence is deployed ethically and responsibly across various sectors. As organizations face increasing regulatory scrutiny, investing in robust governance frameworks is essential for fostering trust and mitigating risks. By adopting these platforms, businesses can navigate the complexities of AI while driving innovation. Start your journey towards responsible AI by exploring available solutions and building a framework that aligns with your organizational values.